On the 24th of April Evidence Based Education and ASCL delivered an assessment conference with a difference.

Not once during the day did any presenter or workshop leader talk about ‘data drops’ or tracking systems. And that felt good. It felt good because the focus was on the purposes of assessment, assessment as pedagogy, the efficiency and reliability of assessment, and making assessment more manageable, meaningful and motivating.

Obviously, we’re biased, but the quality of the sessions was excellent. We heard from Daisy Christodoulou of No More Marking, the Institute of Teaching’s Harry Fletcher-Wood, Lea Valley Primary School’s Allana Gay, Sarah Cunliffe and Leon Walker from Meols Cop Research School and our very own Ourania Ventista and Stuart Kime. But one session in particular has inspired this post. Phil Stock, Assistant Head of Greenshaw High School, ran a session on assessment reliability – ‘How reliable are your assessments and how do you know?’

Phil is a great example of a brave senior leader who’s prepared to lift the lid on the ‘black box’ of assessment in the name of teaching and learning. He’s consciously opted to challenge his understanding and to invest time in getting to grips with a complex area: the area where most improvement is required in Initial Teacher Training provision, according to the Carter Review of Initial Teacher Training (2015), and one in which the Commission on Life Without Levels reported that the quality of training in England is currently ‘far too weak’. (That was at the time of writing; things have improved since. See the Assessment Lead Programme.)

So, how reliable are your assessments, and how do you know?

That’s not a rhetorical question. Think of an assessment you’ve created or used in school – maybe a formative quiz or end-of-topic assessment – and then ask yourself or your colleagues: do we know anything about the reliability – the dependability – of the information we get from that assessment? About the overall quality of that information? Or about the effectiveness of each individual question? (Not all questions are created equal!)

Reliability in assessment is about consistency and accuracy. No assessment is 100% reliable, but reliability can be expressed as a value, and that value gives us an indicator of quality. Outside the classroom, we rely on quality indicators all the time. Who these days chooses a holiday, product or restaurant without checking out its ratings to inform that choice? We don’t think twice about using such indicators, yet barely anyone we ask can provide a robust answer to the question posed by Phil. Rather, the typical response is something along the lines of “Yeah, pretty good.”

To which we respond, “Great, what are you getting? 0.75? 0.79?”

This normally elicits a furrowed brow. Yes, it’s a loaded question that doesn’t win you many friends, but it helps make an important point.

As Daisy Christodoulou says in her book, Making Good Progress, “the lower the reliability of our test, the less valid are our inferences”. Given that information from assessments is used to make judgements and decisions about the needs and progress of pupils, shouldn’t schools be able to answer the question “How reliable is your assessment?”

How can you derive assessment reliability, and how can it be improved?

There are lots of factors which contribute to the reliability of an assessment, but two of the most critical for teachers to acknowledge are:

- the precision of the questions and tasks used in prompting students’ responses; and

- the accuracy and consistency of the interpretations derived from the information an assessment produces.

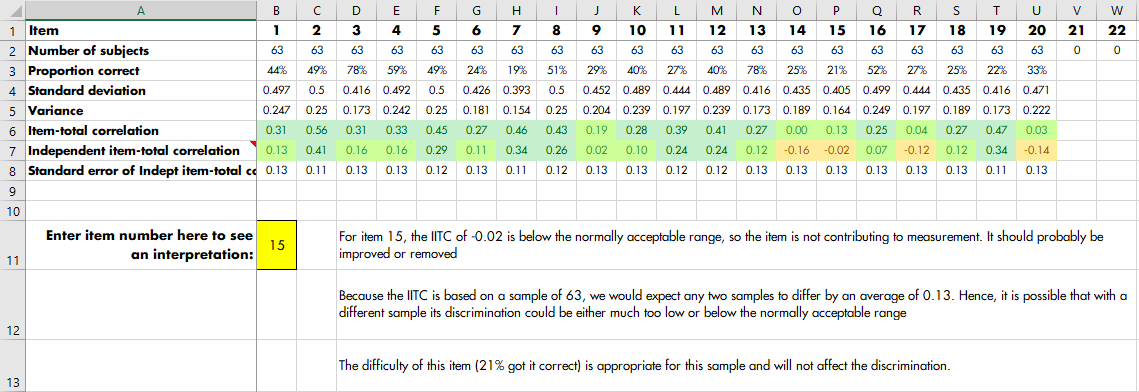

In his school, Phil Stock has used tools that provide measures of reliability – tools like the reliability calculator available through the Assessment Lead Programme. In the name of unbiased reporting, it’s important to point out that other tools are available(!), but using this one in particular is a simple process: you copy and paste your assessment data (the responses from the assessment) into the calculator, which then provides statistical and textual analyses of the overall reliability and of the effectiveness of individual questions. With an indicator of the effectiveness of individual questions, and a prior understanding of how to write good questions (a prerequisite of being able to access the calculator), weaker questions can be removed or refined to improve overall reliability. Essentially, you are using analytics to fine-tune your instrument.

An example of item-level (i.e., question-level) analysis from the reliability calculator. We can see that the advice on Question 15 is to remove or improve the question.
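For anyone curious about what sits behind numbers like these, here is a minimal sketch of this kind of analysis. It is not the Assessment Lead Programme calculator, whose internals aren’t described in this post. It assumes two widely used statistics: Cronbach’s alpha as the overall reliability value, and the item-rest correlation (how well each question agrees with the rest of the test) as the question-level indicator. The marks table and all function names are invented for illustration.

```python
from math import sqrt
from statistics import mean, variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a pupils-by-questions table of marks."""
    k = len(rows[0])  # number of questions
    item_variances = [variance([row[i] for row in rows]) for i in range(k)]
    total_variance = variance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


def pearson(xs, ys):
    """Pearson correlation; returns 0.0 if either list has no spread."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    spread = sqrt(sum((x - mx) ** 2 for x in xs) * sum((y - my) ** 2 for y in ys))
    return cov / spread if spread else 0.0


def item_rest_correlations(rows):
    """Correlate each question with the pupil's total on all other questions."""
    k = len(rows[0])
    result = []
    for i in range(k):
        item = [row[i] for row in rows]
        rest = [sum(row) - row[i] for row in rows]
        result.append(pearson(item, rest))
    return result


# Invented data: one row per pupil, one column per question (1 = correct).
responses = [
    [1, 1, 1, 0],
    [1, 1, 1, 0],
    [1, 1, 0, 1],
    [1, 0, 0, 1],
    [0, 0, 0, 1],
    [0, 0, 0, 0],
]

print("Overall alpha:", round(cronbach_alpha(responses), 2))
for number, r in enumerate(item_rest_correlations(responses), start=1):
    # Treating a negative correlation as a warning sign is an assumed rule of thumb.
    advice = "remove or improve" if r < 0 else "keep"
    print(f"Question {number}: item-rest correlation {r:.2f} -> {advice}")

# Recalculate alpha with the last question left out.
without_last = [row[:-1] for row in responses]
print("Alpha without Question 4:", round(cronbach_alpha(without_last), 2))
```

On this made-up data, Question 4 is the only one that runs against the rest of the test, and the overall reliability rises once it is left out. Real decisions about cutting or rewriting a question would, of course, rest on far more pupils than six and on a look at the question itself.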

Skills worth having

Designing effective, efficient questions and assessment processes for different students at different points in time takes a set of skills that need to be honed. But that initial investment can pay repeated dividends to teachers, and to students. When high-quality questions and assessments are banked and shared, improved assessment efficiency within phases and departments can, in turn, lead to whole-school improvement.

To quote Daisy again: “Reliability is not some pettifogging or pedantic requirement: it is an aspect of validity, and a prerequisite for validity [of our inferences].”

Assessment is inextricable from teaching, and the quality of the latter is – in many ways – dependent on the quality of information derived from the former. So let’s get an answer to that question…